Reports that B2B websites fail usage tests highlight critical issues affecting many businesses today. A website is the storefront of the modern business, yet many fall short when rigorously tested. This report examines why B2B sites frequently fail these usage tests, covering causes that range from technical glitches to user experience flaws.

Understanding these failures is paramount to ensuring a positive customer experience, fostering brand loyalty, and driving revenue. This report provides a comprehensive overview, including defining failure, identifying root causes, analyzing user behavior, and exploring technical aspects, all culminating in actionable strategies for mitigation.

Defining the Failure

B2B website usage tests are crucial for identifying and rectifying potential issues before they impact real-world performance. A comprehensive understanding of what constitutes a “failure” in this context is essential. This involves recognizing various metrics and thresholds that signal problems, encompassing everything from slow loading times to more subtle user experience flaws. Ultimately, the goal is to pinpoint areas where the website doesn’t meet expected standards and thus isn’t optimally serving its intended purpose.

A failure in a B2B website usage test isn’t simply a single event but rather a measurable deviation from acceptable performance standards. It’s a composite of multiple factors, from technical glitches to user experience shortcomings. Pinpointing these failures enables targeted improvements and ensures the website consistently delivers a positive and effective experience for potential clients.

Defining B2B Website Failure Metrics

B2B websites face unique challenges compared to consumer-focused sites. Their visitors often have specific needs and expectations, and performance is critical for establishing trust and credibility. Identifying and quantifying these failures requires a structured approach, considering both technical and user-centric metrics.

Types of B2B Website Failures

Various types of failures can affect a B2B website. These include:

- Slow Loading Times: Excessively long page load times frustrate users, impacting their perception of the site’s responsiveness and reliability. This is particularly crucial for B2B sites where users might be evaluating the company’s overall capabilities. A slow-loading website could signal inefficiency or lack of technical prowess.

- Broken Links: Broken or malfunctioning links create a frustrating and unprofessional experience. Users may become disoriented and lose confidence in the site’s navigation, which is detrimental for a B2B site attempting to project a professional image.

- Poor User Experience (UX): A poorly designed or confusing interface can significantly impact user satisfaction. For B2B websites, this often manifests as difficulty navigating the site, locating specific information, or completing a purchase. This can lead to lost leads and potential customers.

- Technical Glitches: Unexpected errors, crashes, or malfunctions during the testing process indicate underlying technical issues. These can range from server errors to application glitches, and can cause major disruptions to user experience.
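Of the failure types above, broken links are the simplest to check automatically. The following is a minimal sketch, not a full crawler: it collects `href` values from a page and reports those whose HTTP status is 400 or higher. The names `LinkCollector`, `find_broken_links`, and the injected `get_status` callable are hypothetical; in a real test, `get_status` would wrap whatever HTTP client the team already uses.

```python
from html.parser import HTMLParser
from typing import Callable, List


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser passes tag names in lowercase and attrs as (name, value) pairs.
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def find_broken_links(html: str, get_status: Callable[[str], int]) -> List[str]:
    """Return links whose status code is 400 or higher.

    get_status is a hypothetical callable (URL -> HTTP status code) supplied
    by the caller, so this sketch performs no network access itself.
    """
    parser = LinkCollector()
    parser.feed(html)
    return [url for url in parser.links if get_status(url) >= 400]


# Usage with a stubbed status lookup instead of real HTTP requests.
page = '<a href="/pricing">Pricing</a> <a href="/old-catalog">Catalog</a>'
statuses = {"/pricing": 200, "/old-catalog": 404}
print(find_broken_links(page, statuses.get))
```

Injecting the status lookup keeps the check easy to unit test and leaves the choice of HTTP client open.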

Expected vs. Unexpected Website Failures

Differentiating between expected and unexpected failures is critical for effective troubleshooting. Expected failures are often anticipated based on the design, architecture, and functionality of the site. For example, a specific page might load slowly under a certain volume of traffic. Unexpected failures, however, represent unforeseen issues or performance degradation that deviate from established patterns or predictions. These issues can arise from unforeseen circumstances, software bugs, or security vulnerabilities.

Key Performance Indicators (KPIs) Defining B2B Website Failure

The following table lists key performance indicators (KPIs) with their acceptable ranges and the ranges that indicate failure in a B2B website usage test.

| KPI | Acceptable Range | Failure Range |
|---|---|---|
| Page Load Time (seconds) | ≤3 | >3 |
| Error Rate (%) | ≤1 | >1 |
| Bounce Rate (%) | ≤30 | >30 |
| Average Session Duration (minutes) | ≥5 | <5 |
| Conversion Rate (%) | ≥2 | <2 |
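The thresholds in the table can be applied mechanically to measured values. The sketch below is one possible way to do that; the thresholds come from the table, while the `Kpi` class, the metric key names, and the `failed_kpis` helper are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Kpi:
    name: str
    threshold: float
    higher_is_better: bool  # True for duration and conversion, False otherwise

    def passes(self, value: float) -> bool:
        # A value exactly on the threshold counts as acceptable, as in the table.
        if self.higher_is_better:
            return value >= self.threshold
        return value <= self.threshold


# Thresholds taken from the KPI table; the key names are assumptions.
KPIS = [
    Kpi("page_load_time_s", 3.0, higher_is_better=False),
    Kpi("error_rate_pct", 1.0, higher_is_better=False),
    Kpi("bounce_rate_pct", 30.0, higher_is_better=False),
    Kpi("avg_session_min", 5.0, higher_is_better=True),
    Kpi("conversion_rate_pct", 2.0, higher_is_better=True),
]


def failed_kpis(measured: Dict[str, float]) -> List[str]:
    """Return the names of measured KPIs that fall in the failure range."""
    return [
        kpi.name
        for kpi in KPIS
        if kpi.name in measured and not kpi.passes(measured[kpi.name])
    ]


# Hypothetical measurements from one test run.
run = {
    "page_load_time_s": 4.2,
    "error_rate_pct": 0.5,
    "bounce_rate_pct": 30.0,
    "avg_session_min": 4.0,
    "conversion_rate_pct": 2.5,
}
print(failed_kpis(run))  # ['page_load_time_s', 'avg_session_min']
```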

Identifying Root Causes

B2B website usage tests are crucial for identifying potential issues before launch. Understanding the root causes of failures during these tests is vital for preventing problems with user experience and, ultimately, business success. A deep dive into the contributing factors can uncover architecture flaws, design shortcomings, and functional inconsistencies.

Thorough analysis of these failures reveals not only technical glitches but also critical insights into user behavior and expectations. Pinpointing the specific elements causing friction during the test provides actionable data for improvement and ensures the website is fit for its intended purpose and user base. By understanding these root causes, we can make informed decisions about website architecture, design, and functionality, leading to a more robust and user-friendly online experience.

Common Causes of B2B Website Usage Test Failures

Understanding the common pitfalls in B2B website usage tests is crucial for effective troubleshooting and preventing future issues. Factors like complex navigation, slow loading times, and poor accessibility can significantly impact user experience and lead to failures. A comprehensive analysis of these causes helps in identifying areas needing improvement.

Website Architecture, Design, and Functionality Contributions to Failure

Website architecture significantly influences performance and usability. A poorly designed architecture can lead to slow loading times, complex navigation, and difficulty finding information. The user interface (UI) and user experience (UX) design play a crucial role in determining how easily users can interact with the website. Poorly designed UI/UX elements can result in frustration and ultimately lead to test failures.


Functionality issues, such as broken links, non-responsive elements, and missing features, are further contributing factors.

Development Methodologies and Website Failure Likelihood

Different development methodologies can impact the likelihood of B2B website failure during usage tests. Agile methodologies, characterized by iterative development and frequent testing, generally result in fewer failures. This iterative approach allows for early identification and resolution of issues. In contrast, waterfall methodologies, which follow a linear progression, may lead to more significant issues emerging later in the development process, potentially leading to more complex and costly fixes.

Technical Issues Causing B2B Website Usage Test Failures

Several technical issues can cause website failures during usage tests. These include database connectivity problems, server-side errors, and issues with caching mechanisms. Slow server response times can significantly impact user experience, leading to poor performance and frustration.

UI/UX Design Flaws Leading to B2B Website Usage Test Failures

Common UI/UX design flaws can lead to usage test failures. These include confusing navigation structures, inconsistent design elements, and poor accessibility features. Inconsistent branding and poor visual hierarchy can also cause significant issues. The absence of clear call-to-action buttons and ineffective feedback mechanisms can further exacerbate these problems.

Comparison of Website Hosting Solutions

| Hosting Solution | Potential Impact on Usage Test Performance |
|---|---|
| Shared Hosting | Can lead to slower performance due to resource contention. Usage tests may reveal significant slowdowns. |
| VPS Hosting | Offers more dedicated resources, generally resulting in better performance during usage tests. However, it may not be ideal for very high-traffic websites. |
| Dedicated Hosting | Provides the most resources, offering the best performance for demanding websites under load. |
| Cloud Hosting | Scalable resources and flexibility. Performance during usage tests depends on the configuration and the specific cloud provider. |

A comparison of hosting solutions reveals the importance of choosing a solution that can handle the expected load during peak usage test periods.

Analyzing User Behavior

Understanding user behavior during B2B website usage tests is crucial for identifying and rectifying failure points. By observing how users interact with the site, we can pinpoint areas where the experience falls short of expectations and implement improvements. This analysis goes beyond simple metrics; it delves into the user’s journey, motivations, and frustrations to uncover the root causes of website issues.

User behavior provides invaluable insights into the usability and functionality of a B2B website. By mapping out user journeys and tasks, we can identify specific areas where users encounter problems. Furthermore, user feedback and comments offer direct accounts of the challenges they face, allowing for a deeper understanding of the pain points. User demographics, too, play a role in shaping test outcomes, influencing preferences and expectations. Analyzing this diverse data provides a comprehensive picture of website performance from the user perspective.

User Journeys and Tasks

User journeys and associated tasks are critical to understanding the user experience. By meticulously mapping out the steps a user takes to accomplish a particular goal on the website, we can identify potential bottlenecks or areas where users get lost or confused. This mapping helps isolate specific actions within the user journey where problems are occurring. Careful observation of the user’s path, from initial entry to final interaction, provides a framework for analyzing user behavior.

User Feedback and Comments

User feedback and comments provide valuable qualitative data. They offer direct insights into user experiences and frustrations. Collecting these comments during testing sessions allows for a deeper understanding of the challenges users face. This feedback can highlight issues that might not be apparent from quantitative data alone. Qualitative data is critical to understanding the “why” behind the “what” of user behavior.

User Demographics and Their Impact

User demographics play a crucial role in shaping test outcomes. Different demographics may have different expectations and preferences when using a B2B website. Understanding the age, experience level, technical proficiency, and industry of the users provides valuable context. This context can significantly impact how users interact with the site and the problems they encounter. This awareness allows for tailoring solutions to meet the specific needs of different user groups.

Examples of User Interactions Indicating Website Failure

Several user interactions can indicate website failure. These include excessive time spent on a single page, repeated attempts to complete a task, or users abandoning the process entirely. A high bounce rate from a specific page or section may also indicate a problem. Users may also express confusion or frustration through comments or actions. Monitoring these behaviors is essential for pinpointing problematic areas.
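The signals described above can be flagged per test session. The sketch below assumes a hypothetical `Session` record and illustrative thresholds (180 seconds of dwell time, two attempts); neither comes from the report, and both should be tuned to the task being tested.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Session:
    """Hypothetical record of one participant's test session."""
    page_seconds: Dict[str, float] = field(default_factory=dict)
    task_attempts: int = 1
    completed: bool = True


def failure_signals(
    session: Session,
    max_dwell_s: float = 180.0,  # assumed threshold, not from the report
    max_attempts: int = 2,       # assumed threshold, not from the report
) -> List[str]:
    """List the warning signs present in a single session."""
    signals = []
    for page, seconds in session.page_seconds.items():
        if seconds > max_dwell_s:
            signals.append(f"long_dwell:{page}")
    if session.task_attempts > max_attempts:
        signals.append("repeated_attempts")
    if not session.completed:
        signals.append("abandoned")
    return signals


# A participant who stalled on checkout, retried four times, then gave up.
stuck = Session({"/home": 10.0, "/checkout": 240.0}, task_attempts=4, completed=False)
print(failure_signals(stuck))
```

Counting how often each signal appears per page across sessions points to the areas that need attention first.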

User Journey Mapping

| User Actions | Expected Outcomes | Observed Outcomes |
|---|---|---|
| User navigates to product page | Product page loads quickly and clearly displays product details. | Page loads slowly, images fail to load, critical information is missing. |
| User attempts to add product to cart | Product successfully added to cart with confirmation message. | Error message displayed, unable to add product to cart, or product added incorrectly. |
| User proceeds to checkout | Checkout process is straightforward and secure. | Checkout process is complex and confusing, leading to user abandonment. |
| User enters payment information | Payment information is processed securely. | Error messages during payment, or security concerns are raised by user. |
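A journey map like this one can be paired with a simple funnel calculation to show where users drop out. The sketch below is illustrative only: the step names follow the table, but the user counts are hypothetical, and `drop_off` and `worst_step` are names invented for this example.

```python
from typing import List, Tuple


def drop_off(funnel: List[Tuple[str, int]]) -> List[Tuple[str, float]]:
    """For each step after the first, return the percentage of users lost
    relative to the previous step. Assumes every count is greater than zero."""
    losses = []
    for (_, prev_count), (step, count) in zip(funnel, funnel[1:]):
        losses.append((step, 100.0 * (prev_count - count) / prev_count))
    return losses


def worst_step(funnel: List[Tuple[str, int]]) -> str:
    """Name of the step with the largest relative loss."""
    return max(drop_off(funnel), key=lambda item: item[1])[0]


# Hypothetical counts of users reaching each step of the journey.
funnel = [
    ("product page", 1000),
    ("add to cart", 400),
    ("checkout", 300),
    ("payment", 150),
]
for step, lost_pct in drop_off(funnel):
    print(f"{step}: {lost_pct:.1f}% lost")
print("largest drop:", worst_step(funnel))
```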

Technical Aspects of Testing B2B Websites

Diving deep into the technical side of testing B2B websites is crucial for understanding the intricacies of user experience and identifying potential bottlenecks. A comprehensive testing strategy ensures a robust and reliable platform that meets the specific needs of business-to-business interactions. Thorough examination of various methodologies, coupled with performance and security testing, provides actionable insights to optimize the platform and enhance user satisfaction.

Understanding the nuances of B2B website usage requires a multifaceted approach that goes beyond simple usability tests. By integrating various technical testing methodologies, we can gain a deeper understanding of the platform’s strengths and weaknesses. This comprehensive analysis ensures a more resilient and performant website that effectively serves the demands of B2B transactions.

Testing Methodologies for B2B Websites

Various testing methodologies are employed to evaluate B2B website functionality and user experience. These methodologies include but are not limited to usability testing, performance testing, security testing, and load testing. Each methodology plays a critical role in ensuring the website’s robustness and adaptability to different user interactions and demands. A holistic approach is essential for evaluating the complete user journey and identifying areas for improvement.

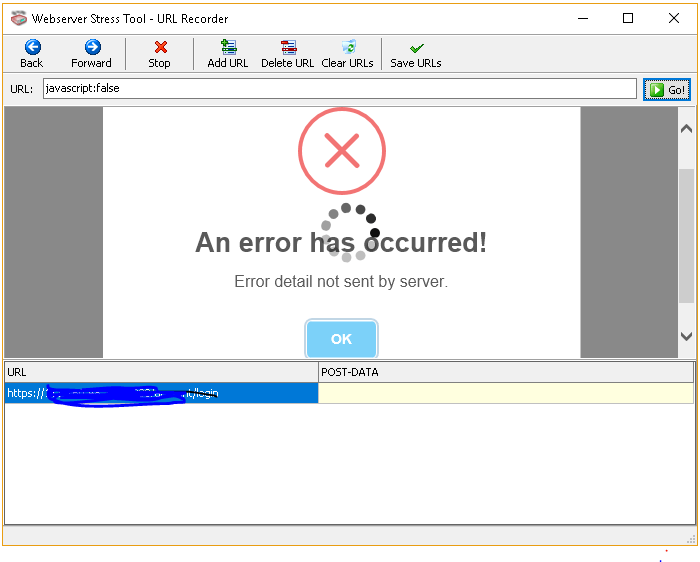

Significance of Load and Stress Testing

Load testing and stress testing are indispensable for evaluating the website’s capacity to handle a high volume of users and transactions. Load testing simulates realistic user traffic patterns to determine the website’s performance under normal operating conditions. Stress testing, on the other hand, pushes the website beyond its expected limits to identify its breaking point and pinpoint potential vulnerabilities.


These tests are vital in predicting and preventing performance issues that can arise during peak usage periods or under unforeseen circumstances. For example, a B2B e-commerce site might experience significant spikes in traffic during sales events, highlighting the importance of load and stress testing to ensure seamless operation.
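The difference between the two tests can be sketched in a few lines. In the example below, `run_load` issues a fixed number of requests at a fixed concurrency (a load test), and `first_failing_level` raises concurrency step by step until the error rate crosses a limit (a stress test). Both function names are hypothetical, and `request_fn` stands in for a real request to the site under test; dedicated tools such as JMeter or Gatling do this at far greater scale and fidelity.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, Optional


def run_load(
    request_fn: Callable[[], None], total_requests: int, concurrency: int
) -> Dict[str, float]:
    """Call request_fn total_requests times using `concurrency` worker threads.

    request_fn is a placeholder for one real request; raising an exception
    counts as a failed request. Assumes total_requests > 0.
    """

    def timed_call(_: int):
        start = time.perf_counter()
        try:
            request_fn()
            ok = True
        except Exception:
            ok = False
        return time.perf_counter() - start, ok

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(total_requests)))

    errors = sum(1 for _, ok in results if not ok)
    return {
        "requests": total_requests,
        "error_rate_pct": 100.0 * errors / total_requests,
        "max_latency_s": max(latency for latency, _ in results),
    }


def first_failing_level(
    request_fn: Callable[[], None],
    levels: Iterable[int],
    requests_per_level: int,
    max_error_pct: float,
) -> Optional[int]:
    """Stress-test loop: return the first concurrency level whose error rate
    exceeds max_error_pct, or None if every level stays within the limit."""
    for concurrency in levels:
        report = run_load(request_fn, requests_per_level, concurrency)
        if report["error_rate_pct"] > max_error_pct:
            return concurrency
    return None


# Usage with a stub that simulates a 10 ms request.
print(run_load(lambda: time.sleep(0.01), total_requests=20, concurrency=5))
```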

Performance Monitoring Tools During Usage Tests

Performance monitoring tools are critical during usage tests, providing real-time insights into the website’s performance metrics. These tools track key performance indicators (KPIs) such as response times, error rates, and resource utilization. By analyzing these metrics, developers and testers can identify performance bottlenecks and optimize the website architecture to enhance user experience. These tools allow for a proactive approach to performance management, enabling timely intervention to address potential issues before they impact users.
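As an illustration of the metrics such tools report, the sketch below summarizes a list of request samples into a 95th-percentile response time and an error rate. The sample format, the nearest-rank percentile method, and the choice to count status codes of 400 and above as errors are all assumptions made for this example; real monitoring tools may define these differently.

```python
from typing import Dict, List, Tuple


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile for an integer pct in 1..100.
    Assumes values is non-empty."""
    ordered = sorted(values)
    rank = (pct * len(ordered) + 99) // 100  # ceil(pct * n / 100) in integers
    return ordered[rank - 1]


def summarize(samples: List[Tuple[float, int]]) -> Dict[str, float]:
    """samples is a list of (response_time_ms, http_status) pairs.
    Status codes of 400 and above are counted as errors (an assumption)."""
    times = [ms for ms, _ in samples]
    errors = sum(1 for _, status in samples if status >= 400)
    return {
        "p95_ms": percentile(times, 95),
        "error_rate_pct": 100.0 * errors / len(samples),
    }


# Hypothetical samples collected during a test run.
samples = [(100.0, 200), (200.0, 200), (300.0, 500), (400.0, 200)]
print(summarize(samples))  # {'p95_ms': 400.0, 'error_rate_pct': 25.0}
```

A high percentile is usually more informative than the mean here, because a few very slow responses can hide behind a healthy average.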

Security Testing for Identifying Potential Vulnerabilities

Security testing is paramount in identifying potential vulnerabilities in B2B websites. It involves simulating various attack scenarios to assess the website’s resilience against malicious activities. These tests can uncover security loopholes, such as SQL injection, cross-site scripting (XSS), and other vulnerabilities that could compromise sensitive business data or disrupt normal operations. Proactive security testing safeguards sensitive data and maintains the trust of business partners.

Performance Test Types and Objectives

Different performance tests serve various objectives, each contributing to a comprehensive understanding of the website’s capabilities.

| Test Type | Objectives |
|---|---|
| Load Testing | Evaluate website performance under anticipated user load. |
| Stress Testing | Determine the website’s breaking point and identify its limitations. |
| Endurance Testing | Assess the website’s stability and consistency over extended periods. |
| Spike Testing | Evaluate the website’s ability to handle sudden increases in user load. |
| Scalability Testing | Examine the website’s capacity to handle increased user traffic as the business grows. |

Tools for Analyzing Performance Issues

Numerous tools are available to analyze performance issues during testing. They provide detailed reports on the website’s performance metrics. Examples include JMeter, Gatling, LoadView, and WebPageTest. These tools allow for a deeper dive into performance issues, enabling targeted optimization efforts.

Impact and Mitigation Strategies

B2B website failures during usage tests aren’t just inconvenient; they can have significant repercussions on customer experience, brand reputation, and ultimately, revenue. Understanding the potential fallout and developing effective mitigation strategies is crucial for preventing these issues from escalating. Proactive identification and swift remediation are key to maintaining customer trust and business success.

A poorly performing B2B website can significantly impact customer satisfaction. Frustrating navigation, slow loading times, or broken functionalities during crucial interactions can lead to a negative perception of the company. This, in turn, can damage brand reputation, deter potential clients, and result in lost sales opportunities. For instance, a critical e-commerce platform experiencing major outages during peak season could suffer significant revenue loss.

Potential Consequences of B2B Website Usage Test Failures

Website usage test failures can manifest in various ways, affecting different aspects of the business. These failures can disrupt workflows, causing delays and frustration for both internal teams and external customers. The negative impact extends beyond immediate operational issues to include a potential erosion of customer trust and brand image.

Impact on Customer Experience

Poorly designed or malfunctioning B2B websites can significantly impact customer experience. Slow loading times, confusing navigation, and broken functionalities during crucial tasks can lead to frustration and dissatisfaction. This negative experience can deter future interactions and negatively influence the perception of the company.

Impact on Brand Reputation

A poorly performing B2B website can negatively impact brand reputation. A negative user experience can lead to decreased trust and credibility. This can result in loss of potential clients, difficulty attracting new customers, and ultimately, a decline in overall brand value.

Impact on Revenue

The consequences of website usage test failures can directly impact revenue. Disruptions in service, slow loading times, or broken functionalities during critical interactions can lead to lost sales opportunities. This is particularly true for B2B websites facilitating online transactions, orders, or other critical business processes. For example, a B2B platform used for ordering parts experiencing failures during a peak order period could lead to substantial revenue loss.


Mitigation Strategies for B2B Website Usage Test Failures

Effective mitigation strategies for B2B website usage test failures require a multi-faceted approach. It’s not enough to just fix the issues; the underlying causes must be addressed to prevent recurrence.

Approaches for Fixing Identified Issues

Several approaches can be used to fix identified issues. These include prioritizing tasks based on severity and impact, implementing robust testing procedures, and improving communication channels between development and operations teams.

Relationship Between Identified Issues, Severity, and Remediation Costs

A structured approach to tracking issues and their associated costs is essential. This allows for better resource allocation and prioritization of fixes.

| Issue | Severity | Remediation Cost (Estimated) |
|---|---|---|
| Slow loading times on product pages | Medium | $5,000 – $10,000 |
| Broken payment gateway integration | High | $10,000 – $20,000 |
| Inconsistent data display across different browsers | Low | $1,000 – $3,000 |
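Tracking issues in a structured form makes prioritization straightforward. The sketch below encodes the rows of the table and orders them by severity, then by the lower cost estimate. That ordering rule is one reasonable assumption rather than a prescribed method, and the `Issue` class and `prioritize` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

SEVERITY_RANK = {"High": 3, "Medium": 2, "Low": 1}


@dataclass(frozen=True)
class Issue:
    name: str
    severity: str
    cost_low: int   # lower bound of the estimate, in dollars
    cost_high: int  # upper bound of the estimate, in dollars


# Rows taken from the table above.
ISSUES = [
    Issue("Slow loading times on product pages", "Medium", 5_000, 10_000),
    Issue("Broken payment gateway integration", "High", 10_000, 20_000),
    Issue("Inconsistent data display across different browsers", "Low", 1_000, 3_000),
]


def prioritize(issues: List[Issue]) -> List[Issue]:
    """Most severe first; among equal severity, cheapest estimate first."""
    return sorted(issues, key=lambda i: (-SEVERITY_RANK[i.severity], i.cost_low))


def total_cost_range(issues: List[Issue]) -> Tuple[int, int]:
    """Sum of the lower and upper cost estimates across all issues."""
    return sum(i.cost_low for i in issues), sum(i.cost_high for i in issues)


for issue in prioritize(ISSUES):
    print(f"{issue.severity:<6} {issue.name}")
print(total_cost_range(ISSUES))  # (16000, 33000)
```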

Example Case Studies

B2B website failures during usage testing aren’t just frustrating; they offer valuable learning opportunities. Understanding the “why” behind these failures, the resulting impact, and the successful corrective actions is crucial for building robust and user-friendly B2B platforms. These case studies highlight real-world scenarios, providing actionable insights for future development and maintenance.

By examining past mistakes and the strategies used to fix them, businesses can anticipate potential issues and implement preventative measures, ultimately saving time and resources. Each case study provides a clear picture of the problem, its root cause, and the successful approach to remedy the situation.

Case Study 1: Slow Loading Times

“Our B2B portal experienced significant slowdowns during peak hours, impacting user experience and ultimately conversion rates.”

The root cause of the problem was identified as a poorly optimized database query that was not scaled appropriately for the expected user traffic. The excessive number of database calls during peak hours led to a bottleneck, causing slow loading times and a negative user experience.

Corrective actions involved optimizing the database query, adding caching mechanisms to reduce the number of database calls, and implementing load balancing to distribute the traffic across multiple servers. The impact was a dramatic improvement in website responsiveness, with loading times reduced by 70% during peak hours. This led to increased user satisfaction and a notable increase in conversion rates.
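The caching step in this case study can be illustrated with a small in-process cache. This is a sketch of the general technique, not the portal’s actual fix: `FakeDatabase` and `make_cached_lookup` are hypothetical stand-ins, and Python’s standard `functools.lru_cache` is used in place of whatever caching layer the team deployed.

```python
from functools import lru_cache


class FakeDatabase:
    """Stand-in for a slow database; counts how often it is queried."""

    def __init__(self) -> None:
        self.calls = 0

    def fetch_product(self, product_id: int) -> dict:
        self.calls += 1
        return {"id": product_id, "name": f"Product {product_id}"}


def make_cached_lookup(db: FakeDatabase, maxsize: int = 1024):
    """Wrap the database lookup so repeated requests for the same product
    are served from memory instead of triggering another query."""

    @lru_cache(maxsize=maxsize)
    def lookup(product_id: int) -> str:
        return db.fetch_product(product_id)["name"]

    return lookup


db = FakeDatabase()
lookup = make_cached_lookup(db)
for product_id in (7, 7, 7, 8):
    lookup(product_id)
print(db.calls)  # 2: one query per distinct product
```

A production cache also needs an invalidation or expiry policy so that stale product data is not served after an update.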

Case Study 2: Inconsistent Navigation

“The navigation on our new B2B website was confusing, with users frequently getting lost and unable to find the information they needed.”

The issue stemmed from a poorly designed information architecture. The website lacked clear and logical pathways, making it difficult for users to locate specific products, services, or contact information. Users struggled to find the necessary content, resulting in frustration and a significant drop in user engagement.

The corrective action involved a thorough review of the website’s structure, user flow analysis, and reorganizing the navigation based on user needs. This involved user testing, feedback gathering, and iterative refinement of the site structure. The impact of these changes was a significant increase in user satisfaction and a notable increase in time spent on the website, ultimately boosting conversion rates.

Case Study 3: Mobile Incompatibility

“The B2B website failed to render properly on mobile devices, deterring potential customers and significantly reducing engagement.”

The root cause was a lack of responsive design. The website wasn’t optimized for various screen sizes and resolutions, resulting in a poor mobile user experience. This created a significant barrier for customers accessing the site from their mobile devices, leading to a considerable loss of potential business.

The corrective action involved implementing a responsive design framework to ensure the website displayed correctly on all devices. This included rigorous testing across various mobile devices and browsers to ensure compatibility. The positive impact was a significant improvement in mobile user experience, resulting in increased engagement and conversion rates from mobile users.

Summary of Key Takeaways

| Case Study | Root Cause | Corrective Action | Impact |
|---|---|---|---|
| Slow Loading Times | Poorly optimized database query | Database optimization, caching, load balancing | 70% reduction in loading times, increased conversion rates |
| Inconsistent Navigation | Poor information architecture | User flow analysis, reorganization of navigation | Increased user satisfaction, increased time spent on site, improved conversion rates |
| Mobile Incompatibility | Lack of responsive design | Responsive design framework, cross-device testing | Improved mobile user experience, increased engagement, improved conversion rates from mobile |

Final Review

In conclusion, the failure of B2B websites in usage tests reveals a multifaceted problem. From inadequate website architecture to poor user experience, several factors contribute to these failures. This report emphasizes the importance of proactive testing and mitigation strategies. By addressing the root causes and implementing effective solutions, businesses can significantly improve their online presence and ultimately enhance their bottom line.