Privacy issues won’t go away, and profiling and stereotyping are a large part of the reason. From historical anxieties about surveillance to today’s sophisticated data collection, concerns about our personal information persist. This persistent issue isn’t just about the technology; it’s deeply rooted in societal values and power dynamics. The ever-increasing volume of data, coupled with powerful algorithms, creates new avenues for profiling and stereotyping, potentially leading to biased outcomes.

We’ll explore how data collection, analysis, and breaches impact privacy, examine strategies for addressing these concerns, and consider the future of privacy in a digital world.

The persistent nature of privacy issues is further complicated by the increasing volume and accessibility of data. Data breaches and misuse pose significant risks to individual privacy, raising ethical questions about data collection and analysis practices. Anonymization and encryption are important tools to mitigate privacy risks, but addressing the root causes of profiling and stereotyping requires a multi-faceted approach involving individuals, companies, and policymakers.

Different cultures and demographics also have varying levels of concern and different interpretations of privacy rights.

Understanding the Persistence of Privacy Concerns

The digital age has ushered in unprecedented levels of interconnectedness, yet simultaneously amplified anxieties about privacy. This tension isn’t new; concerns about personal information have been a recurring theme throughout history, evolving in tandem with societal structures and technological advancements. Understanding this enduring concern requires examining its historical roots, societal drivers, and technological impacts.

Privacy is not a static concept; its meaning and importance have shifted dramatically across different eras.

Early forms of privacy often revolved around physical space and the protection of personal belongings. As societies became more complex, the need for legal and institutional frameworks to safeguard individual information grew. Today, privacy concerns encompass not only physical and digital spaces but also the vast and constantly expanding networks of data collection and analysis.

Historical Overview of Privacy Concerns

Privacy concerns have existed throughout history, manifesting in different forms depending on the societal structures and technological capabilities of each era. In ancient civilizations, the concept of personal space and the right to control information about oneself was already present, albeit in a less formalized way than in modern times. The evolution of privacy law and policy in the 20th and 21st centuries reflects the increasing sophistication of data collection and processing techniques.

Societal Factors Contributing to Enduring Privacy Issues

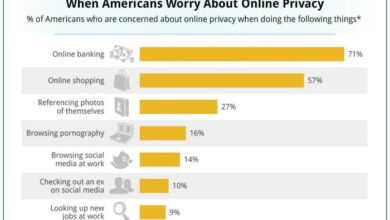

Several societal factors contribute to the ongoing debate about privacy. The rise of mass media and surveillance technologies, coupled with a growing distrust of institutions, has amplified public anxieties. Concerns about government overreach, corporate data practices, and the potential for misuse of personal information have become pervasive. Cultural norms regarding data sharing and individual autonomy also vary significantly, further complicating the issue.

Technological Advancements and Their Impact on Privacy

Technological advancements have both enhanced and threatened privacy. The proliferation of social media, smartphones, and the internet has made it easier to connect with others, but it has also created new avenues for data collection and potential misuse. Data analytics and artificial intelligence are increasingly capable of revealing intricate details about individuals, raising questions about the boundaries of acceptable data usage.

For instance, facial recognition technology, while offering potential benefits, also raises serious concerns about mass surveillance.

Interrelation Between Privacy Concerns and Societal Values

Privacy concerns are deeply intertwined with societal values. Values such as individual autonomy, freedom of expression, and the right to self-determination are central to the debate about privacy. A society that values individual liberty will likely place a higher emphasis on privacy protections than one that prioritizes collective security. The balance between these values is constantly being negotiated in the face of new technological capabilities.

Comparison of Privacy Concerns Across Cultures and Demographics

| Culture/Demographic | Key Privacy Concerns | Specific Examples |
|---|---|---|
| Western Cultures | Focus on individual rights and data protection. | Stricter regulations on data collection and use, emphasis on consent. |
| Eastern Cultures | Balancing individual needs with collective interests. | Greater emphasis on communal values, potential for less stringent individual privacy laws. |
| Low-income Communities | Concerns about access to services and the potential for discrimination. | Data collected about financial situations, health status could disproportionately impact access to resources. |
| High-income Communities | Concerns about the security of sensitive information and potential financial exploitation. | Concerns about sophisticated data breaches and targeted phishing schemes. |

The table above provides a simplified overview; the nuances and complexities within each category are significant. It is important to acknowledge that these categories are not mutually exclusive and that overlapping concerns exist across diverse cultural and demographic groups.

Profiling and Stereotyping as Privacy Threats

The digital age has brought unprecedented access to vast amounts of data, enabling sophisticated profiling and stereotyping. While this data can be valuable for targeted advertising and personalized services, its misuse can lead to significant privacy violations and societal harm. The insidious nature of these practices lies in their ability to reinforce pre-existing biases and create self-fulfilling prophecies, potentially leading to discriminatory outcomes.

Profiling and stereotyping undermine privacy by reducing individuals to simplified representations based on limited data points.

These representations often fail to capture the complexity and diversity of human experience, which can lead to unfair or inaccurate assessments. The consequences range from biased loan decisions to denied employment opportunities, highlighting the severe implications of these practices.

Mechanisms of Undermining Privacy

Profiling and stereotyping typically occur through the automated analysis of personal data, frequently without transparency or meaningful human oversight. Algorithms trained on datasets that reflect existing societal biases can perpetuate and amplify those biases. This can lead to discriminatory outcomes even when the algorithms appear neutral. Furthermore, the lack of transparency in these processes makes it difficult for individuals to understand how they are being profiled or why they are being treated differently.

This lack of understanding further erodes trust and exacerbates privacy concerns.

Negative Consequences of Biased Profiling

The negative consequences of biased profiling and stereotyping are far-reaching and can affect individuals and communities in numerous ways. For instance, individuals might face denial of credit, higher insurance premiums, or even biased criminal justice outcomes based on their profiles. Communities may experience increased marginalization, perpetuating cycles of disadvantage. Furthermore, the erosion of trust in institutions and systems can have significant long-term effects on social cohesion and stability.

Role of Algorithms and Data Collection

Algorithms, trained on vast datasets, can inadvertently reflect and amplify existing societal biases. This occurs when the data used to train these algorithms contains disproportionate representation of certain groups or when the algorithms themselves are designed in ways that favor certain outcomes. For example, an algorithm used to assess loan applications might be trained on historical data that shows a higher default rate among a specific demographic group, leading to an unfairly higher risk assessment for members of that group.

This reinforces existing stereotypes and can perpetuate systemic inequality. The automated nature of these processes often obscures the decision-making process, making it challenging to identify and address potential biases.
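To make the loan example concrete, the Python sketch below uses a deliberately naive, hypothetical scorer. It mixes an applicant’s own debt-to-income ratio with the historical default rate of the group they belong to. The records, groups, weights, and function names are all invented for illustration. Real credit models are far more complex, but the same effect can arise whenever group membership, or a proxy for it, enters the model.

```python
from collections import defaultdict

# Hypothetical historical records: (group, defaulted). The skew between the
# groups is invented and may reflect past discrimination, not individual risk.
HISTORY = [
    ("A", False), ("A", False), ("A", False), ("A", True),
    ("B", False), ("B", True), ("B", True), ("B", False),
]

def group_default_rates(history):
    """Return the observed historical default rate for each group."""
    totals, defaults = defaultdict(int), defaultdict(int)
    for group, defaulted in history:
        totals[group] += 1
        defaults[group] += int(defaulted)
    return {g: defaults[g] / totals[g] for g in totals}

def naive_risk_score(applicant, rates):
    """Hypothetical score: half the individual debt-to-income ratio, half the
    group's historical default rate. The group term carries the bias."""
    return 0.5 * applicant["debt_to_income"] + 0.5 * rates[applicant["group"]]

rates = group_default_rates(HISTORY)
applicant_a = {"group": "A", "debt_to_income": 0.3}
applicant_b = {"group": "B", "debt_to_income": 0.3}  # identical finances

print(round(naive_risk_score(applicant_a, rates), 3))  # 0.275
print(round(naive_risk_score(applicant_b, rates), 3))  # 0.4
```

Both applicants have identical finances, yet applicant B scores as riskier solely because of the group term. Removing the explicit group field does not necessarily fix this, because proxies such as postcode can carry the same signal.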

Examples of Discriminatory Outcomes

Examples of discriminatory outcomes arising from profiling and stereotyping are numerous and concerning. Targeted advertising that reinforces harmful stereotypes, biased lending decisions, and biased criminal justice outcomes are some of the direct consequences. Furthermore, individuals might be denied employment opportunities or housing based on inaccurate profiles created from limited data. These examples highlight the need for greater transparency and accountability in data collection and algorithmic decision-making.

Data Types and Profiling

| Data Type | Profiling Examples |
|---|---|
| Location Data | Predicting criminal activity based on past crime statistics in specific areas; profiling users based on their frequent locations. |
| Purchase History | Creating profiles for targeted advertising based on past purchases; using purchasing habits to assess creditworthiness or financial stability. |
| Social Media Activity | Creating profiles of users based on their online interactions and relationships; using social media data to assess political or social views. |
| Medical Records | Predicting health risks based on past diagnoses and treatment patterns; using medical data for targeted insurance pricing. |

This table demonstrates how different types of data can be used to create profiles that can lead to discriminatory outcomes. The potential for misuse and bias is evident in each case. Addressing these issues requires a multifaceted approach that combines technical solutions with ethical guidelines.

The Interplay of Data and Privacy

The digital age has ushered in an era of unprecedented data collection and accessibility. This deluge of information, while offering immense potential for progress, simultaneously raises significant privacy concerns. We are constantly generating data, from our online browsing habits to our location tracking, creating a vast and interconnected digital footprint. Understanding how this data interacts with our privacy is crucial for navigating this evolving landscape.

The increasing volume and accessibility of data fuel privacy concerns by making it easier to collect, store, and analyze personal information on a massive scale.

This ability to amass and process vast datasets creates both opportunities and vulnerabilities. The potential for misuse, whether through intentional breaches or unintended consequences of analysis, becomes more pronounced as the quantity of data grows. The interconnectedness of data points also means that seemingly innocuous information can be linked and interpreted in ways that reveal sensitive details about individuals.
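Here is a short Python sketch of how such linking works, assuming two hypothetical datasets. The first is a release with names stripped out. The second is a public roster that includes names. Joining them on shared quasi-identifiers (here ZIP code, birth date, and sex) is enough to reattach a name to a diagnosis. All records, names, and field names are invented.

```python
# Hypothetical "anonymized" release: names removed, quasi-identifiers kept.
health_records = [
    {"zip": "12345", "birth_date": "1971-04-02", "sex": "F", "diagnosis": "hypertension"},
    {"zip": "12346", "birth_date": "1985-01-12", "sex": "M", "diagnosis": "asthma"},
]

# Hypothetical public dataset that does include names.
public_roster = [
    {"name": "Resident 1", "zip": "12345", "birth_date": "1971-04-02", "sex": "F"},
    {"name": "Resident 2", "zip": "12347", "birth_date": "1990-03-03", "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")

def link_records(anonymized, identified, keys=QUASI_IDENTIFIERS):
    """Join two datasets on shared quasi-identifiers and return
    (name, diagnosis) pairs wherever the combination matches."""
    index = {}
    for person in identified:
        index.setdefault(tuple(person[k] for k in keys), []).append(person["name"])
    matches = []
    for record in anonymized:
        for name in index.get(tuple(record[k] for k in keys), []):
            matches.append((name, record["diagnosis"]))
    return matches

print(link_records(health_records, public_roster))  # [('Resident 1', 'hypertension')]
```

Neither dataset reveals anything sensitive about a named person on its own. The disclosure comes entirely from combining them.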

Data Breaches and Privacy Violations

Data breaches, intentional or accidental, represent a significant threat to individual privacy. These incidents can expose sensitive personal information, such as financial details, medical records, and personal identifiers, leading to identity theft, financial losses, and emotional distress. The consequences of data breaches can extend far beyond the immediate victims, affecting entire communities and organizations. For example, a breach at a healthcare provider could expose the medical records of thousands of patients, jeopardizing their health and well-being.

A breach at a financial institution could lead to widespread fraud and financial hardship for countless individuals.

Ethical Implications of Data Collection and Analysis

Data collection and analysis raise ethical questions that need to be addressed deliberately. The potential for bias in algorithms and the use of data for discriminatory purposes are serious concerns. Data profiling, often used for targeted advertising or credit scoring, can lead to unfair or discriminatory outcomes. For example, if an algorithm used for loan applications is trained on data that reflects existing societal biases, it may perpetuate those biases and deny credit to applicants from certain demographic groups.

Furthermore, the lack of transparency in data analysis practices can make it difficult to understand how personal information is being used and potentially exploited.
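One way to make such outcomes visible is to audit a model’s decisions by group. The Python sketch below is a minimal illustration with hypothetical data. It compares approval rates between groups and reports the ratio of the lowest rate to the highest. The 0.8 threshold echoes the "four-fifths" rule of thumb from US employment-selection guidance. It is used here only as an illustrative screening threshold, not as a legal test for lending.

```python
def approval_rates(decisions):
    """Map each group to its share of approved applications.

    decisions: iterable of (group, approved) pairs."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    return min(rates.values()) / max(rates.values())

# Hypothetical model outputs for ten applicants in each of two groups.
decisions = ([("A", True)] * 8 + [("A", False)] * 2
             + [("B", True)] * 5 + [("B", False)] * 5)

ratio = disparate_impact_ratio(decisions)
THRESHOLD = 0.8  # illustrative screening threshold, not a legal test
print(f"ratio={ratio:.3f}", "review" if ratio < THRESHOLD else "ok")
```

A low ratio does not prove discrimination on its own, and a ratio near 1.0 does not rule it out. It is a signal for closer human review, which also helps close the transparency gap described above.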

Data Anonymization and Encryption Techniques

Data anonymization and encryption are crucial tools for mitigating privacy risks. Anonymization techniques remove or coarsen identifying information in datasets, while encryption protects data during transmission and storage. By obscuring identifying characteristics, anonymization makes it more difficult to link data back to specific individuals. Removing names alone is often not enough, however, because the remaining attributes can still be linked with other datasets. Encryption, on the other hand, protects sensitive information from unauthorized access. These techniques can be combined to create robust privacy safeguards.
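Here is a minimal Python sketch of two common steps, under stated assumptions. First, direct identifiers are replaced with a keyed hash. This is pseudonymization, which on its own is weaker than full anonymization, because whoever holds the key can re-link records for known identifiers. Second, quasi-identifiers are coarsened, and the result is checked for k-anonymity, a named technique swapped in here for illustration. The key, field names, and records are hypothetical. Encryption is deliberately not shown; it should come from a vetted library such as the `cryptography` package rather than hand-written code.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret key; in practice it would be kept in a key-management
# system, separately from the pseudonymized data.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

def pseudonymize(identifier: str) -> str:
    """Replace a direct identifier with a truncated keyed hash (HMAC-SHA256)."""
    return hmac.new(PSEUDONYM_KEY, identifier.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def generalize(record: dict) -> dict:
    """Coarsen quasi-identifiers: keep the first 3 ZIP digits and a 10-year age band."""
    low = (record["age"] // 10) * 10
    return {
        "id": pseudonymize(record["email"]),
        "zip3": record["zip"][:3],
        "age_band": f"{low}-{low + 9}",
        "diagnosis": record["diagnosis"],
    }

def is_k_anonymous(rows, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs at least k times."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return all(count >= k for count in groups.values())

raw = [
    {"email": "a@example.com", "zip": "12345", "age": 34, "diagnosis": "asthma"},
    {"email": "b@example.com", "zip": "12399", "age": 38, "diagnosis": "flu"},
    {"email": "c@example.com", "zip": "12311", "age": 31, "diagnosis": "asthma"},
]
released = [generalize(r) for r in raw]
print(is_k_anonymous(released, ("zip3", "age_band"), k=3))  # True
```

Before generalization, each person is unique on ZIP code and age. After it, all three records share the same coarse values, so no single record can be singled out by those fields alone. The trade-off is less precise data for analysis.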

In practice, the two are often combined: data can be anonymized and then encrypted, so that it stays protected in storage and transit while authorized parties can still decrypt and analyze it.

Privacy issues, unfortunately, aren’t going anywhere; they’re deeply intertwined with profiling and stereotyping. Even amid the seemingly boundless opportunities of e-commerce, the underlying data collection practices can still lead to biased outcomes. This constant surveillance, even in seemingly innocuous online interactions, reinforces existing societal biases, and ultimately, the privacy issue won’t go away.

Data Protection Regulations Worldwide

| Regulation/Law | Region | Key Focus |
|---|---|---|
| General Data Protection Regulation (GDPR) | European Union | Individual rights, data minimization, transparency, and data security. |
| California Consumer Privacy Act (CCPA) | California, USA | Consumer rights regarding their personal information, including the right to access, delete, and control their data. |
| Data Protection Act 2018 (DPA) | United Kingdom | Protecting personal data and ensuring fair and lawful processing. |
| Personal Information Protection and Electronic Documents Act (PIPEDA) | Canada | Protecting personal information held by organizations in the private sector. |

These regulations and laws are designed to address the challenges posed by data privacy in the digital age. They outline specific rules and requirements for data collection, processing, and security. By adhering to these regulations, organizations can demonstrate their commitment to safeguarding individual privacy. Understanding the specific requirements of these laws is critical for organizations operating globally.

Strategies for Addressing Privacy Concerns

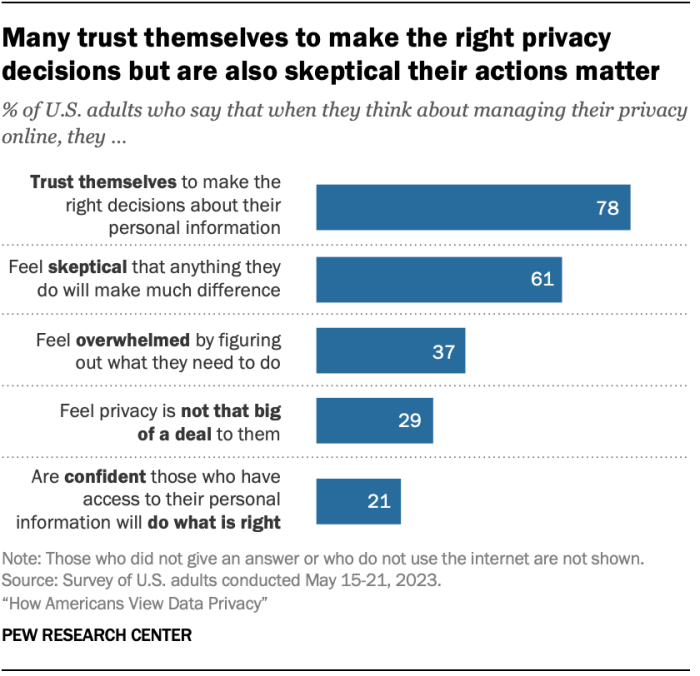

Protecting personal information in the digital age is paramount. The increasing reliance on data, coupled with the potential for misuse and discrimination, necessitates proactive strategies to safeguard privacy. These strategies must encompass a multifaceted approach, involving robust data security measures, public education initiatives, and clear policy frameworks.

Data security is not a static concept; it’s a dynamic process that requires continuous adaptation.

Privacy issues, unfortunately, aren’t going away anytime soon. Profiling and stereotyping are deeply ingrained in the way data is collected and used, so the potential for bias and discrimination is ever-present. Consolidation makes this worse. The idea of a “grand unified database”, a single comprehensive store linking records from many sources, shows how such a system could exacerbate these problems and enable subtler, more harmful forms of bias.

The result is a vicious cycle, where the pursuit of “perfect” data leads to an inescapable privacy problem.

The techniques for ensuring data integrity and confidentiality must evolve alongside emerging threats and technologies. Robust security protocols are vital to prevent unauthorized access, misuse, and breaches of sensitive information.

Improving Data Security and Privacy

Data breaches are a significant concern. Implementing strong encryption protocols, multi-factor authentication, and regular security audits can mitigate the risk of unauthorized access. Regular vulnerability assessments, rigorous incident response plans, and adherence to industry best practices are crucial. For instance, companies like Google and Facebook invest heavily in security measures to protect user data. These efforts aim to protect sensitive information from malicious actors.
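To illustrate one of these measures, here is a minimal Python sketch of time-based one-time passwords (TOTP, RFC 6238), the mechanism behind many authenticator-app second factors. It uses only the standard library and reproduces a published RFC test vector. It is a sketch, not production code. Real deployments typically exchange the shared secret base32-encoded, accept a small clock-skew window, rate-limit attempts, and rely on a maintained library.

```python
import hashlib
import hmac
import struct
import time

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, for_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over fixed time steps."""
    now = time.time() if for_time is None else for_time
    return hotp(secret, int(now // step), digits)

def verify(secret: bytes, submitted: str, for_time=None) -> bool:
    """Compare in constant time; this sketch has no clock-skew window."""
    return hmac.compare_digest(totp(secret, for_time), submitted)

# RFC 6238 SHA-1 test vector: ASCII secret "12345678901234567890", T = 59 s.
print(totp(b"12345678901234567890", for_time=59, digits=8))  # 94287082
```

The server and the user’s device share the secret once, at enrollment. After that, both sides derive the same short-lived code from the current time, so a stolen password alone is not enough to log in.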

Examples of Successful Privacy Initiatives

Several initiatives, many of them legislative, have strengthened user privacy. For example, the California Consumer Privacy Act (CCPA) gives individuals greater control over their personal data, and the GDPR (General Data Protection Regulation) in Europe reflects the same growing emphasis on user rights and data protection. Similar regulations are emerging globally. Together they signal a shift towards a more user-centric approach to data management.

Educating the Public About Privacy Risks and Solutions

Public awareness is critical to fostering a culture of privacy. Educational campaigns can highlight the potential risks associated with data breaches and the importance of responsible data handling. These campaigns can be implemented through various channels, such as educational materials, workshops, and online resources. Clear and accessible explanations of privacy policies and user rights are essential for informed decision-making.

The Role of Policymakers in Addressing Privacy Issues

Policymakers play a critical role in shaping privacy standards and regulations. Clear guidelines and regulations on data collection, storage, and use are vital. Legislation should address issues like profiling and stereotyping to prevent discrimination and ensure fairness. Effective policies must be adaptable to the evolving landscape of data usage and technological advancements. International cooperation and harmonization of data protection laws are also crucial.

Comparing and Contrasting Data Governance and Regulation

Different approaches to data governance and regulation exist globally. Some regions prioritize a rights-based approach, focusing on empowering individuals with control over their data. Others adopt a risk-based approach, focusing on identifying and mitigating potential risks. A balanced approach, incorporating elements of both, is likely to be most effective. Regulations such as the GDPR emphasize user rights, while others focus on industry standards.

Comparing these approaches reveals the need for diverse and context-specific solutions. The ideal approach may differ depending on the industry, the type of data being handled, and the specific legal and ethical considerations.

Illustrative Examples of Privacy Violations

The digital age has brought unprecedented convenience and connection, but it has also exposed us to new and insidious privacy threats. Data breaches, often involving sensitive personal information, are a stark reminder of the vulnerabilities inherent in our interconnected world. These breaches can have devastating consequences, affecting individuals’ financial security, reputation, and emotional well-being. Understanding these violations is crucial to developing effective strategies for safeguarding our privacy.

A Recent Data Breach Example: The 2022 Facebook Data Breach

In 2022, Facebook, now Meta, faced a significant data exposure that compromised the personal information of millions of users, including names, email addresses, and phone numbers. This data leak highlighted the vulnerability of centralized platforms and the potential for malicious actors to exploit these systems for personal gain.

Profiling and Stereotyping in Data Breaches

The Facebook breach, while not explicitly targeting specific groups, demonstrates how seemingly innocuous data can be used for profiling and stereotyping. Imagine the potential for advertisers or even malicious actors to use this data to create profiles of individuals based on their browsing history or interests. Such profiles could then be used to target individuals with tailored advertisements or even to create prejudiced judgments, potentially leading to discriminatory outcomes.

Company Responses to Privacy Concerns: A Case Study of Equifax

In 2017, Equifax, a major credit reporting agency, suffered a massive data breach exposing the personal information of over 147 million people. This incident prompted a significant public outcry and scrutiny of the company’s data security practices. Equifax’s response involved a combination of financial settlements and an attempt to enhance data security measures. However, the incident underscored the need for companies to prioritize data security and transparency, as well as to respond swiftly and effectively to breaches.

The company’s response, while initially criticized, was a crucial step towards improving data protection measures and enhancing consumer trust.

Legal Ramifications of Privacy Violations

Data breaches often result in legal action, including class-action lawsuits, regulatory investigations, and fines. The legal consequences of such violations can be substantial, ranging from hefty financial penalties to reputational damage. Individuals and organizations affected by breaches may seek compensation for damages incurred. For instance, the Equifax breach led to numerous lawsuits, and the company was forced to pay substantial sums in settlements.

Privacy issues such as profiling and stereotyping, unfortunately, aren’t going anywhere. Even as companies like Hastings expand their e-commerce presence, the underlying data collection and analysis that fuel these services raise questions about how our personal information is used and potentially misused. The core problem of bias in algorithms and datasets remains, regardless of technological advancements.

The legal battles arising from data breaches often set important precedents and influence future data security regulations.

Summary Table of Privacy-Related Lawsuits and Controversies

| Company/Entity | Violation Type | Affected Parties | Legal Outcomes |
|---|---|---|---|
| Facebook (2022) | Data Exposure | Millions of users | Ongoing investigations and public scrutiny |
| Equifax (2017) | Massive Data Breach | Over 147 million people | Class-action lawsuits, financial settlements, and regulatory action |
| Target (2013) | Point-of-Sale Data Breach | Millions of customers | Financial settlements and improved security protocols |

This table provides a concise overview of some significant privacy violations. Each case highlights different facets of privacy issues and the importance of robust data protection measures. It also underscores the evolving nature of privacy concerns in the digital age.

Potential Future Trends and Developments

The digital age continues its relentless march forward, transforming how we live, work, and interact. As technology advances, so do our privacy concerns, demanding a proactive and adaptable approach to safeguard personal information. Understanding the potential evolution of these concerns is crucial to fostering a future where privacy is not just a right, but a reality.

Likely Evolution of Privacy Concerns in the Digital Age

Privacy concerns in the digital age will likely become more multifaceted and intertwined with emerging technologies. The sheer volume of data generated, collected, and analyzed will increase exponentially, leading to more sophisticated methods of profiling and targeting individuals. Concerns about data breaches, misuse of personal information, and algorithmic bias will persist and likely escalate in intensity.

Anticipated Impact of Emerging Technologies on Privacy Rights

Emerging technologies, such as the Internet of Things (IoT), artificial intelligence (AI), and blockchain, present both opportunities and challenges to privacy rights. The proliferation of interconnected devices collecting vast amounts of data about our daily routines raises concerns about the scope and extent of data collection. The use of AI in decision-making processes, such as loan applications or hiring, may perpetuate existing biases or create new ones, impacting individuals unfairly.

The potential for blockchain technology to enhance transparency and security in data handling must be balanced with concerns about the potential for data misuse or unintended consequences.

Artificial Intelligence and Machine Learning to Address Privacy Issues

AI and machine learning offer potential solutions to privacy challenges. AI-powered systems can be designed to detect and prevent data breaches, analyze patterns of data usage to identify potential misuse, and even anonymize data to protect individual privacy. However, the development and deployment of such systems must be accompanied by rigorous ethical considerations to avoid reinforcing existing biases or creating new ones.

Moreover, the potential for AI systems to learn and adapt to evolving privacy threats requires ongoing monitoring and evaluation.

New Privacy Challenges in the Future

New privacy challenges will emerge as technology evolves. The increasing use of virtual reality (VR) and augmented reality (AR) technologies will raise questions about the collection and use of data about user behavior in these immersive environments. The potential for deepfakes and synthetic media to spread misinformation and manipulate individuals will also necessitate new approaches to safeguarding privacy and combating the spread of disinformation.

Potential Future Regulatory Frameworks for Data Protection

A table outlining potential future regulatory frameworks for data protection is provided below:

| Framework Category | Description | Potential Challenges |
|---|---|---|
| Global Harmonization | Establishing common data protection standards across different jurisdictions. | Achieving consensus on key principles, balancing global competitiveness with privacy protection. |
| AI-Specific Regulations | Developing regulations tailored to the unique data protection concerns posed by AI systems. | Defining clear criteria for AI fairness, transparency, and accountability. |
| Data Minimization and Purpose Limitation | Stricter regulations enforcing the principle of collecting only necessary data for specific, stated purposes. | Balancing the need for data with potential limitations on research, innovation, and business operations. |
| Data Subject Rights Empowerment | Giving individuals greater control over their data, including access, rectification, and erasure rights. | Ensuring practical implementation and enforcement of these rights in a technologically advanced environment. |
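The data-minimization and purpose-limitation row can be made concrete with a small Python sketch. It assumes a hypothetical policy table that maps each declared processing purpose to the fields that purpose needs. Anything not listed is withheld, and an undeclared purpose fails loudly. The purposes, field names, and record are invented for illustration.

```python
# Hypothetical policy: declared purposes and the fields each one needs.
ALLOWED_FIELDS = {
    "shipping": {"name", "street", "city", "postcode"},
    "fraud_check": {"card_last4", "billing_postcode", "order_total"},
}

def minimize(record: dict, purpose: str) -> dict:
    """Return only the fields permitted for the stated purpose.

    Raises KeyError for an undeclared purpose, so a new use of the data
    must be declared before any fields are released to it."""
    allowed = ALLOWED_FIELDS[purpose]
    return {k: v for k, v in record.items() if k in allowed}

customer = {
    "name": "Sample Customer", "street": "1 Example Way", "city": "Springfield",
    "postcode": "00000", "date_of_birth": "1990-01-01", "card_last4": "4242",
    "billing_postcode": "00000", "order_total": 59.90,
}
print(minimize(customer, "shipping"))
```

If an unknown purpose returned the full record instead of failing, every new use of the data would quietly receive everything. Failing forces the purpose to be declared first, which is the idea behind purpose limitation.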

Conclusion

In conclusion, the privacy issue won’t go away, and the interplay between profiling, stereotyping, and data is a complex and evolving challenge. We’ve seen how historical anxieties persist in the digital age, with new threats arising from advanced technologies. While data anonymization and encryption can help, a fundamental shift in our approach to data governance and regulation is needed.

Protecting our privacy requires a collaborative effort involving individuals, businesses, and policymakers to address the ethical and legal implications of data collection and usage. The future of privacy will depend on our ability to adapt to emerging technologies while upholding our fundamental right to privacy.